Focus on generative AI series - Part 1

Generative AI has been a major topic since the release of ChatGPT, built on GPT-3.5, in November 2022. At Dydu, experts in conversational AI since 2009, we have followed its development closely. In discussions with our customers, partners and prospects, we have found that the subject remains relatively complex to grasp. What’s more, the ecosystem is evolving very rapidly, which makes the task even more difficult. The aim of this series of articles, entitled ‘Focus on generative AI’, is to review the fundamentals of LLMs, deconstruct certain preconceived ideas and provide some food for thought on how to use them.

What is an LLM?

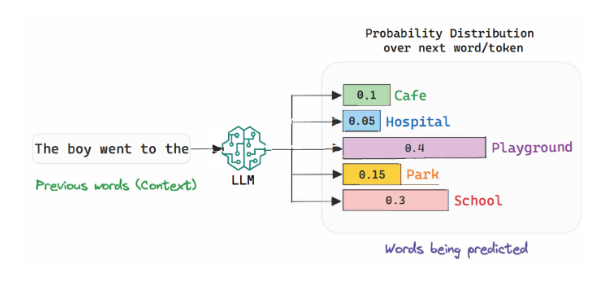

LLMs (Large Language Models) are machine learning models capable of understanding and generating text in human language. They work by analysing huge datasets of text to learn to predict the words that will come next in a sentence. They then generate coherent responses based on these probabilities. At each step, an LLM proposes a likely next token (a word or word fragment) given its context, and this context is enriched with each new token added. This step-by-step generation is called ‘inference’, a process we look at below.

These models use neural networks to detect relationships between words and sentences, enabling them to produce coherent, relevant texts. You can think of an LLM as an ultra-intelligent writing assistant, drawing on accumulated data from books, articles and online forums to provide relevant, well-constructed answers.

How does an LLM work?

Through a process called ‘inference’, the model analyses sequences of words and uses probabilities to predict what comes next. Each word or phrase it adds becomes part of the context for the next prediction, so the answer is built up one step at a time.

Let’s take a simple example. If you give the model the start of a sentence such as:

👉 “The sun rises in the east and…” The LLM will draw on what it learned during training to predict a plausible completion such as ‘sets in the west’.

It is therefore important to give the input prompt (the initial context) as much relevant information as possible. Crafting this input is known as prompt engineering.
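As a rough illustration of this loop, the sketch below uses a toy model that simply counts which word follows each three-word context in a tiny hand-written corpus. The corpus, the three-word context and the function names are assumptions made for illustration only; a real LLM uses a neural network over subword tokens and usually samples from the probabilities rather than always taking the top choice.

```python
from collections import Counter, defaultdict

# Tiny stand-in for training data (illustrative assumption).
CORPUS = (
    "the sun rises in the east and sets in the west . "
    "the moon rises in the evening ."
)

CONTEXT_SIZE = 3  # number of previous words the toy model looks at


def build_counts(text):
    """Count which word follows each CONTEXT_SIZE-word context."""
    counts = defaultdict(Counter)
    words = text.split()
    for i in range(len(words) - CONTEXT_SIZE):
        context = tuple(words[i:i + CONTEXT_SIZE])
        counts[context][words[i + CONTEXT_SIZE]] += 1
    return counts


def generate(prompt, counts, max_new_tokens=10):
    """Greedy decoding: append the most frequent next word, then repeat."""
    context = prompt.split()
    for _ in range(max_new_tokens):
        candidates = counts.get(tuple(context[-CONTEXT_SIZE:]))
        if not candidates:
            break  # context never seen in the corpus: nothing to predict
        next_word = candidates.most_common(1)[0][0]
        if next_word == ".":
            break  # end of sentence
        context.append(next_word)  # the new word enriches the context
    return " ".join(context)


counts = build_counts(CORPUS)
print(generate("the sun rises in the east and", counts))
```

Each pass through the loop adds one word and feeds the longer context back in, which is the enrichment described above.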

Source: The Philosophical Ledger

The 6 key stages in training an LLM

1 – Gathering, cleaning and splitting data: The first step in LLM training is to collect textual data from a variety of sources, such as books, press articles and online forums. Once this data has been collected, it is cleaned to remove errors, inappropriate content and bias. Finally, the data is broken down into small units (called tokens) that the model will analyse.
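To make the cleaning and splitting step more concrete, here is a minimal sketch. It assumes word-level tokens and a very simple cleaning rule (removing leftover HTML tags); production LLMs use subword tokenizers and far more elaborate filtering, so the function names and rules below are illustrative only.

```python
import re


def clean(text):
    """Remove leftover HTML tags and collapse repeated whitespace."""
    text = re.sub(r"<[^>]+>", " ", text)
    return re.sub(r"\s+", " ", text).strip()


def tokenize(text):
    """Split into lowercase word and punctuation tokens (word-level, for illustration)."""
    return re.findall(r"\w+|[^\w\s]", text.lower())


def build_vocab(tokens):
    """Give each distinct token an integer id, in order of first appearance."""
    vocab = {}
    for tok in tokens:
        vocab.setdefault(tok, len(vocab))
    return vocab


raw = "<p>The sun rises   in the east.</p>"
tokens = tokenize(clean(raw))
vocab = build_vocab(tokens)
ids = [vocab[t] for t in tokens]
print(tokens)
print(ids)
```

The model never sees raw text, only sequences of integer ids like the ones printed here.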

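For example, GPT-3, the model family on which GPT-3.5 is based, was reportedly trained on a dataset that included around 570 GB of filtered web text. This represents hundreds of billions of tokens, analysed to learn the structure of language and the relationships between words.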

2 – Initial model configuration (Transformer architecture): Once the data is ready, the model is configured. It is based on a specific architecture called the Transformer, designed to process large quantities of textual information quickly and efficiently. At this stage, the size of the model, the number of processing layers and other technical parameters are also defined.

3 – Model training: This is the longest and most expensive stage. The LLM is trained to predict the next word in a sentence based on the previous words. The aim is to minimise prediction errors over time. This process is called self-supervised learning: the correct answers are not labelled by hand but come from the text itself, since the word that actually follows serves as the example to learn from.
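The training objective can be sketched as follows. The example shows how (context, next word) pairs are cut directly out of a sentence, and how a standard cross-entropy loss scores a prediction. The probability tables are made-up values standing in for a model's output, and the function names are hypothetical.

```python
import math


def next_token_pairs(tokens):
    """Each prefix of the text is an input; the token that follows is the target."""
    return [(tokens[:i], tokens[i]) for i in range(1, len(tokens))]


def cross_entropy(predicted_probs, target):
    """Loss is small when the model gives the true next token a high probability."""
    return -math.log(predicted_probs.get(target, 1e-12))


tokens = "the sun rises in the east".split()
pairs = next_token_pairs(tokens)

# Made-up model outputs for the context "the sun rises in the".
confident = {"east": 0.9, "west": 0.1}
unsure = {"east": 0.5, "west": 0.5}

print(pairs[-1])
print(cross_entropy(confident, "east"), cross_entropy(unsure, "east"))
```

Training consists of adjusting the model's parameters so that this loss, averaged over a very large number of pairs, goes down.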

Myth #1

Contrary to what you might think, an LLM like ChatGPT does not learn from the questions asked by users. Once the model has been trained, it is frozen, unless it is re-trained with new data.

4 – Checking the model: Once the training is complete, the model is tested. It is presented with new data that it has never seen before to check its ability to generalise and provide correct answers. Performance measures are used to ensure that the model works well before it is made available to users.
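One widely used performance measure for language models is perplexity, computed on text the model has not seen during training. The sketch below is one possible illustration, not necessarily the measure used for any particular model; the input is assumed to be the probabilities the model assigned to each true next token of a held-out text.

```python
import math


def perplexity(token_probs):
    """Exponential of the average negative log-probability of the true tokens."""
    avg_nll = -sum(math.log(p) for p in token_probs) / len(token_probs)
    return math.exp(avg_nll)


# Made-up probabilities assigned to the correct next tokens of a held-out text.
good_model = [0.5, 0.5, 0.5, 0.5]
weak_model = [0.25, 0.25, 0.25, 0.25]

print(perplexity(good_model), perplexity(weak_model))
```

A lower perplexity means the model was, on average, less "surprised" by the unseen text.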

5 – Using the model: Once validated, the model can be deployed in different applications. For example, it can be used in chatbots, to generate content, or to provide answers to questions. The model does not improve at this stage: it simply uses what it has learned during its initial training.

6 – Improving the model: If necessary, the model can be re-trained or adjusted. This is called fine-tuning, and it makes it possible to refine the model’s responses in specific contexts or to add new data. But once deployed, the model does not automatically learn from day-to-day interactions with users.

A few key concepts to help you understand LLMs

Hallucinations: One of the main limitations of LLMs is the phenomenon of ‘hallucinations’, where the model produces incorrect or misleading answers, but in a very convincing way. These errors can be problematic, particularly in professional contexts where accuracy is crucial.

Myth #2

It is often assumed that hallucinations can be avoided. In reality, they can be reduced but not eliminated: they follow from the probability-based way LLMs generate text, their lack of deep understanding and the limitations of their training data.

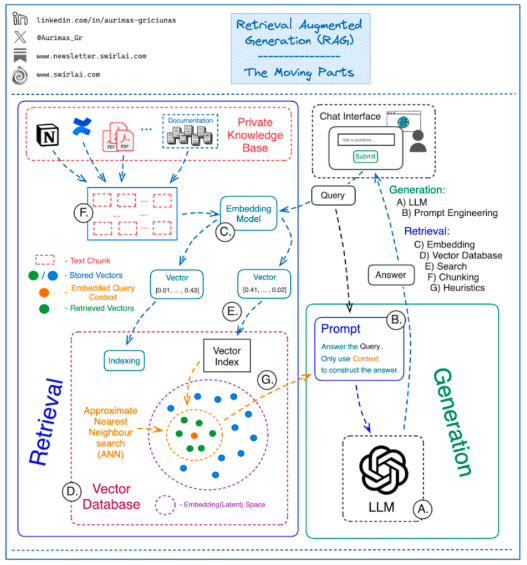

Retrieval Augmented Generation (RAG) and semantic indexing: Textual content from a document base is indexed, then searched using the user’s question. The most relevant passages are combined with the question to build a prompt that gives the LLM context drawn from the documents.

Fine-tuning: This involves further training an already-trained LLM on additional, domain-specific data in order to specialise its responses.

Source: SuperAnnotate
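The retrieval and prompt-building steps can be sketched as below. This is a simplified illustration under stated assumptions: the documents are invented, and a bag-of-words count vector stands in for the semantic embeddings a real RAG system would compute with a dedicated model. The final call to the LLM is left out.

```python
import math
import re
from collections import Counter

# Hypothetical document base (invented content).
DOCUMENTS = [
    "Password resets are done from the account settings page.",
    "Invoices are sent by email on the first day of each month.",
    "Support is available by chat from 9am to 6pm on weekdays.",
]


def embed(text):
    """Stand-in for an embedding model: a bag-of-words count vector."""
    return Counter(re.findall(r"\w+", text.lower()))


def cosine(a, b):
    """Cosine similarity between two count vectors."""
    dot = sum(a[w] * b[w] for w in a if w in b)
    norm_a = math.sqrt(sum(v * v for v in a.values()))
    norm_b = math.sqrt(sum(v * v for v in b.values()))
    return dot / (norm_a * norm_b) if norm_a and norm_b else 0.0


def retrieve(question, documents, k=1):
    """Return the k documents most similar to the question."""
    q = embed(question)
    ranked = sorted(documents, key=lambda d: cosine(q, embed(d)), reverse=True)
    return ranked[:k]


def build_prompt(question, documents, k=1):
    """Combine the retrieved passages and the question into one prompt."""
    context = "\n".join(retrieve(question, documents, k))
    return (
        "Answer using only the context below.\n"
        f"Context:\n{context}\n"
        f"Question: {question}"
    )


print(build_prompt("When are invoices sent?", DOCUMENTS))
```

The LLM then answers from the supplied passage rather than from its training data alone, which is what makes RAG useful for company-specific content.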

Optimising the performance of generative AI

The performance of an LLM can be adjusted using several parameters:

- Temperature: The temperature is an adjustable value, typically between 0 and 1 (some APIs accept higher values), that controls how random, and therefore how ‘creative’, the model’s output is. The higher the temperature, the more varied the responses; close to 0, the model almost always picks the most likely token.

- Max New Tokens: This parameter controls the maximum length of the responses generated.

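- Top P and Top K: These values restrict the set of candidate tokens the model can choose from at each step. Top K keeps only the K most likely tokens, while Top P keeps the smallest set of tokens whose cumulative probability reaches P. Both help strike a balance between quality and variety.

- Context window: To generate a response, an LLM needs context. The context window is the maximum size of this context, measured in tokens. A larger context window lets the model take more information into account, which can improve the response, but it also increases generation time.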
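The effect of temperature, Top K and Top P on the choice of the next token can be sketched as follows. The scores in `LOGITS` are made-up values and the function names are hypothetical; real inference libraries apply the same ideas to vocabularies of tens of thousands of tokens. Note that this sketch requires a temperature strictly greater than 0.

```python
import math
import random


def apply_temperature(logits, temperature):
    """Softmax of logits / temperature; a lower temperature sharpens the distribution."""
    scaled = {tok: score / temperature for tok, score in logits.items()}
    peak = max(scaled.values())  # subtracted for numerical stability
    exps = {tok: math.exp(v - peak) for tok, v in scaled.items()}
    total = sum(exps.values())
    return {tok: e / total for tok, e in exps.items()}


def top_k_filter(probs, k):
    """Keep the k most likely tokens, then renormalise."""
    kept = dict(sorted(probs.items(), key=lambda kv: kv[1], reverse=True)[:k])
    total = sum(kept.values())
    return {tok: p / total for tok, p in kept.items()}


def top_p_filter(probs, p):
    """Keep the smallest set of top tokens whose cumulative probability reaches p."""
    kept, cumulative = {}, 0.0
    for tok, prob in sorted(probs.items(), key=lambda kv: kv[1], reverse=True):
        kept[tok] = prob
        cumulative += prob
        if cumulative >= p:
            break
    total = sum(kept.values())
    return {tok: q / total for tok, q in kept.items()}


def sample(probs, rng):
    """Draw one token at random according to its probability."""
    tokens = list(probs)
    return rng.choices(tokens, weights=[probs[t] for t in tokens], k=1)[0]


# Made-up scores for the token following "The sun rises in the ..."
LOGITS = {"east": 3.0, "morning": 2.0, "sky": 1.0, "west": 0.0}

warm = apply_temperature(LOGITS, 1.0)
cold = apply_temperature(LOGITS, 0.2)
print(warm)
print(cold)
print(sample(top_p_filter(warm, 0.8), random.Random(0)))
```

At the lower temperature almost all of the probability goes to the top token, while Top K and Top P simply remove unlikely candidates before the random draw.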

To conclude, this introduction to generative AI offers an overview of its potential and how it works. But it is only the beginning. In the next parts of the series, we will explore the current uses and challenges of generative AI, in particular the limits of LLMs and their role in our digital tools. Finally, we will analyse the market for LLMs and their future in a rapidly evolving technological sector. A not-to-be-missed sequel to anticipate future developments!

👉 Stay tuned for the rest of the ‘Generative AI Focus’ series:

- Part 2: Uses and challenges of generative AI

- Part 3: What future for LLMs?

(Watch and report by Mathieu Changeat)