“Generative AI Focus” series - Part 3

In the second part of our “Generative AI Focus” series, we looked at how companies can integrate language models effectively, without thinking that LLMs will solve every problem… Indeed, although they offer impressive capabilities, language models need to be used for targeted tasks, where their added value is real.

That article explores the limits and current uses of generative AI, examining the technical, ecological, economic and legal constraints, as well as the challenges that arise. To find out how to integrate these technologies responsibly, maximizing their potential without going overboard, (re)discover Part 2: Limits, uses and challenges of generative AI.

The current market for generative AI

LLM suppliers

The generative AI market is booming, with major players such as Microsoft, Amazon, Meta and Google investing large sums in the development of these technologies.

- Microsoft has taken a notable lead by investing heavily in OpenAI, the creator of language models such as GPT-4. With an estimated commitment of over $13 billion, Microsoft is now integrating OpenAI’s capabilities into its flagship products, including the Office suite (via Copilot) and its Azure cloud platform, thus strengthening its position in generative AI.

- Amazon, meanwhile, has announced a strategic partnership with Anthropic, a key player in the sector, through an investment of up to $4 billion*. This agreement will enable Amazon to strengthen its cloud offering with solutions based on advanced AI models, while supporting the development of Claude, Anthropic’s model.

- Meta and Google, two other US stalwarts, are also investing billions in this technology. Meta is developing its own open source models, such as Llama, to democratize access to generative AI, while Google is betting on Gemini (formerly Bard) and its Vertex AI platform to rival solutions from OpenAI and Microsoft.

It is striking to note that these four dominant players are all based in the USA, reflecting American hegemony in this technological field. This situation raises questions about digital sovereignty and the ability of other regions, such as Europe, to position themselves in the face of these behemoths.

- GPT 🇺🇸 (versions 3.5, 4, 4o) 💰 Microsoft: $13 billion

- Claude 🇺🇸 (versions 2, 2.1, 3, 3.5) 💰 Amazon: $4 billion

- Llama 🇺🇸 (versions 2, 3, 3.1)

- Gemini 🇺🇸 (versions 1.0, 1.5)

- Grok 🇺🇸

- Mistral 🇫🇷 (version: Mixtral)

- Ernie 🇨🇳

- AliceMind 🇨🇳

- Yandex 🇷🇺

Apple, meanwhile, stands out with its strategy: it no longer speaks of “Artificial Intelligence” but of “Apple Intelligence”*. Currently available on iOS 18, i.e. on the iPhone 15 Pro and later*, it relies on so-called MLMs (Medium Language Models) such as Ferret*, OpenELM* and DCLM-7B* to offer compact AI solutions focused on specific use cases. Unlike large LLMs, these models are designed to run tasks locally, directly on Apple devices. This approach minimizes dependence on remote servers for simple queries, while leaving more complex tasks to OpenAI* models where necessary.

GPU chips, the sinews of war

Another key factor in the market is the use of GPU chips, which are essential for training LLMs. Nvidia* dominates this segment, having briefly surpassed the market capitalization of Apple and Microsoft in 2024*. Competitors are responding to this demand, and some customers, such as Microsoft, are developing their own AI chips*.

To give an idea of the importance of GPU chips, Llama 3.1, one of the most recent LLMs on the market at the time of writing, required 16,000 H100 GPU chips to train, at a total cost of around €500 million.
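As a rough back-of-the-envelope check, the two figures quoted above can be turned into an implied cost per chip. This is only a sketch: it assumes the whole €500 million is attributable to the 16,000 GPUs, which the figures above do not break down, and the variable names are purely illustrative.

```python
# Figures quoted in the text above.
gpu_count = 16_000
total_cost_eur = 500_000_000

# Assumption: the entire total is spread evenly over the chips.
implied_cost_per_gpu = total_cost_eur / gpu_count

print(f"Implied cost per GPU: about €{implied_cost_per_gpu:,.0f}")
```

Under that assumption, each chip accounts for roughly €31,250 of the total, which helps explain why access to GPUs weighs so heavily on who can afford to train large models.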

Market size

Estimated at $5.2 billion* in 2024, the AI market is up 7.5% on 2023. The market is already sizable and could even reach $100 billion by 2028*. Yet, contrary to appearances, profitability remains a challenge: OpenAI and Anthropic, for example, are still posting substantial annual losses*. The early days of Microsoft Copilot also illustrated the financial challenges of generative AI, with early versions whose costs exceeded the revenues generated*. At the same time, some sectors, notably consulting, are taking full advantage. For example, IBM recorded a $1 million* gain in its consulting business thanks to generative AI, a sign of the positive impact of these technologies for companies that integrate them strategically.

The Future of LLMs

Bubble or no bubble?

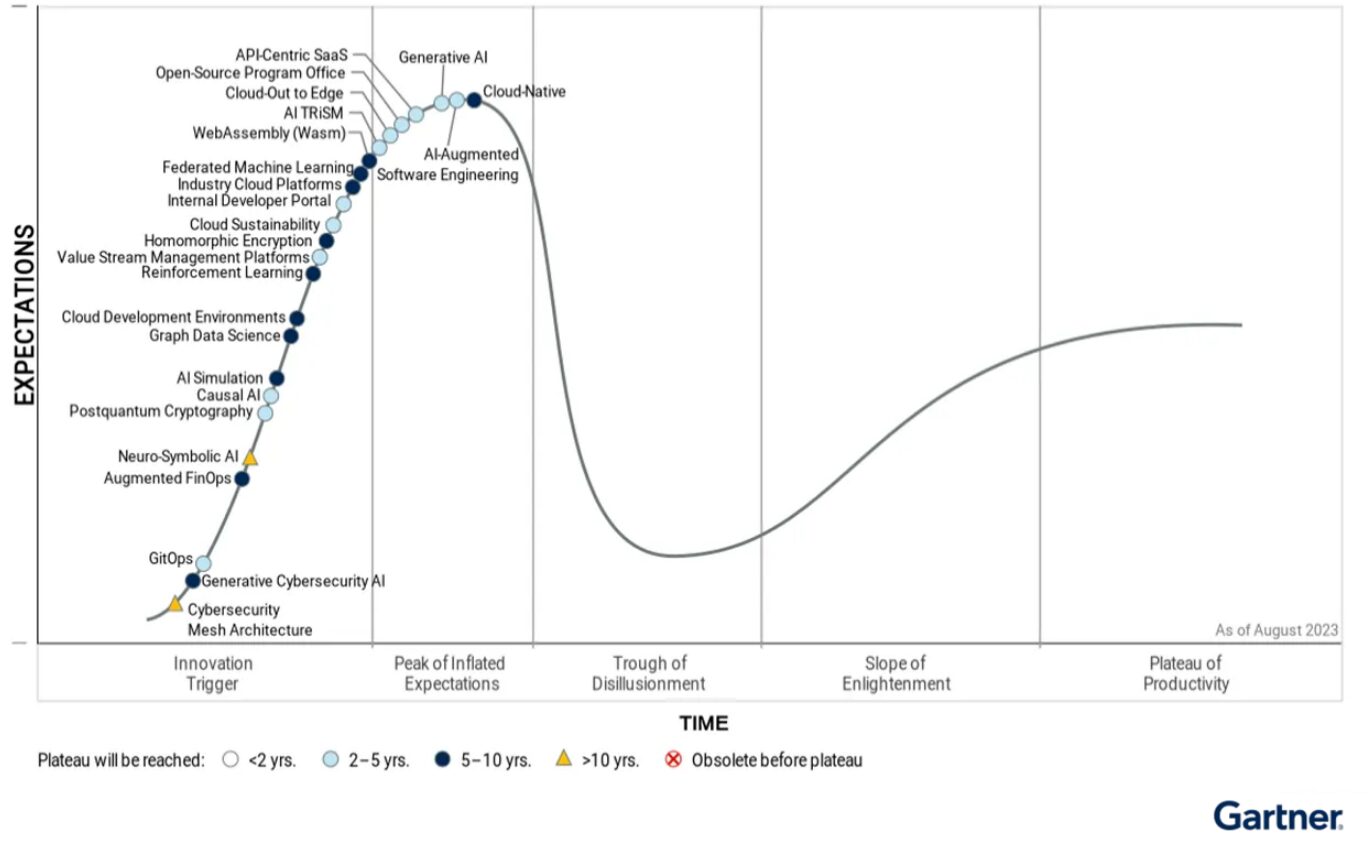

As investment pours in, one question persists: will AI solve enough problems to justify the $1 trillion being invested? The “Magnificent 7” – Apple, Nvidia, Alphabet, Meta, Microsoft, Amazon and Tesla – are spending billions on AI projects, despite a significant gap between operating costs and current revenues. According to the Gartner Hype Cycle, we have reached the peak of the hype, but these giants still have balance sheets solid enough to support the future growth and profitability of AI.

Source: Gartner Hype Cycle

Other avenues of exploration around LLMs

Creating high-performance language models is becoming increasingly complex and costly. As a result, attention is now turning towards smaller, more specialized solutions, rather than continuing to develop ever-larger LLMs.

Here are a few areas of innovation to consider:

- Compact models: SLMs* (Small Language Models) and MLMs* (Medium Language Models) are being explored to offer targeted performance while reducing costs. Companies such as Apple (with the integration of models in iPhones*) and Microsoft (with Phi-3*) are turning their attention to this type of model.

- Specialized models: Focused on specific tasks or sectors, these models are designed to deliver greater efficiency and profitability. Examples:

– Meditron*, built on Meta’s Llama models, to help healthcare professionals make clinical decisions and diagnoses.

– Florence-2*, for image recognition.

– SpreadsheetLLM*, for data processing in spreadsheets.

- Multi-modal models*: Capable of processing text, image and sound, these models enable richer applications, such as medical image analysis with text descriptions, or audio interpretation for security.

- Enhanced code generation*: LLMs are evolving to produce more accurate and optimized code, accelerating software development and supporting technical teams.

- Enhanced security and confidentiality*: New approaches are emerging, such as data decentralization and advanced encryption, to guarantee confidentiality and reduce the risk of misuse of data processed by models.

- Customization*: Tailoring models to specific business needs makes them more relevant and effective for targeted applications.

Google & the generative AI industry

The online search industry is in the throes of change, under the growing influence of generative AI. Google is still the world leader, with over 90%* market share, but faces growing competition from alternative engines (DuckDuckGo, Baidu, Yandex…). The rise of LLMs, notably with ChatGPT, has changed the industry, even prompting Bing to integrate this technology to strengthen its position. Despite persistently high advertising revenues, Google is seeing a decline in profits on its partner sites, a sign of a possible shift towards AI-based search technologies. Faced with this revolution, investment in generative AI has exploded, with $13 billion invested in 2023, redefining the online search landscape and forcing the giants to adapt.

Dydu and generative AI

By combining Dydu’s expertise with the capabilities of LLMs, our solutions overcome some of the current limitations of these models, such as unreliable responses, the inability to ask for clarification, and processing costs and times. This synergy maximizes their potential while meeting operational and strategic requirements. At Dydu, we believe in a hybrid approach, where a bot’s knowledge base management is combined with the text generation power of an LLM.

Our uses include:

- Knowledge base creation: we use language models to generate knowledge bases more rapidly from existing databases, and to facilitate their enrichment and continuous updating.

- Parallel NLU: Natural Language Understanding (NLU) is a key technology for understanding and analyzing user intentions. We combine Dydu’s algorithms with the capabilities of LLMs, to improve understanding of complex or ambiguous queries, while increasing the accuracy of responses.

- RAG method: Retrieval-Augmented Generation (RAG) consists of enriching text generation by LLMs by connecting them to specific databases or sources of information. Before producing an answer, the model retrieves reliable and relevant data from a dedicated knowledge base.

This enables us to provide our users with more precise, up-to-date and relevant answers.
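To make the RAG idea more concrete, here is a minimal sketch of the retrieve-then-generate flow. It is not Dydu’s implementation: the knowledge base entries, the word-overlap scoring and the `generate` callback (standing in for a call to whichever LLM is used) are all illustrative assumptions.

```python
import re
from collections import Counter
from math import sqrt

# Hypothetical knowledge base; real entries would come from curated bot content.
KNOWLEDGE_BASE = [
    "Password resets are done from the login page via the 'Forgot password' link.",
    "Invoices are available in the customer area under the Billing tab.",
    "Support is open Monday to Friday, from 9 am to 6 pm.",
]


def tokenize(text):
    """Lowercase the text and split it into alphanumeric words."""
    return re.findall(r"[a-z0-9]+", text.lower())


def cosine(a, b):
    """Cosine similarity between two word-count vectors (Counter objects)."""
    dot = sum(a[t] * b[t] for t in set(a) & set(b))
    norm = sqrt(sum(v * v for v in a.values())) * sqrt(sum(v * v for v in b.values()))
    return dot / norm if norm else 0.0


def retrieve(question, documents, top_k=1):
    """Return the top_k documents sharing the most vocabulary with the question."""
    query = Counter(tokenize(question))
    scored = [(cosine(query, Counter(tokenize(doc))), doc) for doc in documents]
    scored.sort(key=lambda pair: pair[0], reverse=True)
    return [doc for score, doc in scored[:top_k] if score > 0]


def build_prompt(question, passages):
    """Ground the model by placing the retrieved passages in the prompt."""
    context = "\n".join(f"- {p}" for p in passages)
    return (
        "Answer using only the context below. "
        "If the context is insufficient, say so.\n"
        f"Context:\n{context}\n"
        f"Question: {question}"
    )


def answer(question, documents, generate):
    """Retrieve first, then generate; `generate` is a placeholder for an LLM call."""
    passages = retrieve(question, documents)
    if not passages:
        return "Sorry, I could not find this in the knowledge base."
    return generate(build_prompt(question, passages))
```

Calling `answer("Where can I find my invoices?", KNOWLEDGE_BASE, generate)` would pass the billing entry to the model as context, while a question with no match in the knowledge base returns the fallback message without calling the model at all. A production system would typically replace the word-overlap scoring with embedding-based search.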

Through our positioning, we encourage our employees to use these technologies wisely, taking care to limit their carbon footprint and maximize their economic efficiency for our customers.

As the LLM market evolves, we remain attentive to the ecological and financial challenges of generative AI. With the targeted integration of generative AI, we ensure that we respond precisely and individually to the specific needs of each of our customers.

👉 To rediscover the first parts of the “Generative AI Focus” series:

(Monitoring and report by Mathieu Changeat)